Automate Azure Sentinel Deployment

Azure Sentinel is a scalable, cloud-native security information and event management (SIEM) and security orchestration, automation, and response (SOAR) solution. Azure Sentinel delivers intelligent security analytics and threat intelligence across the enterprise, providing a single solution for alert detection, threat visibility, proactive hunting, and threat response.

As with any other cloud service, you can automate most of the Azure Sentinel deployment and configuration. In this post, you will learn how to automate the core components of Azure Sentinel.

Prerequisites

Before we start, there are a few global prerequisites that you need to meet:

- An active Azure subscription. If you don’t have one, create a free account before you begin.

- Contributor permissions to the subscription.

- PowerShell V7. If you don’t have it installed, install it from the GitHub repository.

Azure Sentinel Automation tools

Bringing the right set of tools to the mission allows you to provide the best solution in the shortest time. Before you begin your journey, spend some time getting familiar with the following tools:

PowerShell V7

PowerShell V7 is a cross-platform task automation and configuration management framework, consisting of a command-line shell and scripting language. Make sure it is installed on your system.

Az Module

Azure PowerShell Az module is a PowerShell module for interacting with Azure. Az offers shorter commands, improved stability, and cross-platform support.

To install it, you run the following command:

Install-Module az -AllowClobber -Scope CurrentUser

AzSentinel Module

AzSentinel is a module built by Wortel, and it will help us automate a few of the processes.

You install the AzSentinel Module with the following command:

Install-Module AzSentinel -Scope CurrentUser -Force
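Before going further, it can help to confirm that the modules are actually available on the machine. The following is a minimal sketch, not part of either module: the helper name Get-MissingModule is hypothetical, and the list of Az sub-modules is an assumption based on the cmdlets used later in this post.

```powershell
# Hypothetical helper: returns the names of required modules that are not installed.
function Get-MissingModule {
    param(
        [Parameter(Mandatory)]
        [string[]] $Name
    )
    foreach ($module in $Name) {
        # Get-Module -ListAvailable returns nothing when the module is not installed.
        if (-not (Get-Module -ListAvailable -Name $module)) {
            $module
        }
    }
}

# Assumed list: the Az sub-modules behind the cmdlets used in this post, plus AzSentinel.
$required = 'Az.Accounts', 'Az.Resources', 'Az.OperationalInsights', 'AzSentinel'
$missing  = @(Get-MissingModule -Name $required)

if ($missing.Count -gt 0) {
    Write-Warning "Missing modules: $($missing -join ', ')"
}
else {
    Write-Output 'All required modules are installed.'
}
```

If the warning lists a module, install it with Install-Module as shown above and run the check again.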

Splatting

In most of the code examples, I use “splatting” to pass the parameters. Splatting makes your commands shorter and easier to read. You can read more about it here.
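To make the idea concrete, here is a small self-contained sketch of splatting. The function New-Greeting is hypothetical and exists only for the demonstration; the same pattern applies to any cmdlet used in this post.

```powershell
# Hypothetical function, defined only to demonstrate splatting.
function New-Greeting {
    param(
        [string] $Name,
        [string] $Greeting = 'Hello',
        [switch] $Shout
    )
    $text = "$Greeting, $Name"
    if ($Shout) { $text = $text.ToUpper() }
    $text
}

# Without splatting: every parameter is written on the command line.
$direct = New-Greeting -Name 'Sentinel' -Greeting 'Welcome' -Shout

# With splatting: parameters live in a hashtable and are passed with @ instead of $.
$Parms = @{
    Name     = 'Sentinel'
    Greeting = 'Welcome'
    Shout    = $true   # switch parameters are set with a Boolean value
}
$splatted = New-Greeting @Parms

# Both calls produce the same string.
$direct -eq $splatted
```

The hashtable keys must match the parameter names of the command you call, which is why the examples in this post use keys such as Name, Location, and WorkspaceName.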

Connect to Azure with PowerShell

You also need to set up a session to Azure from PowerShell, and you can create one with the Az module.

You need to get your Tenant ID and Subscription ID from the Azure Portal.

With this information, you can use the Connect-AzAccount cmdlet to create a session with Azure:

$TenantID = 'XXXX-XXXX-XXXX-XXXX-XXXX'
$SubscriptionID = 'XXXX-XXXX-XXXX-XXXX'

Connect-AzAccount -TenantId $TenantID -SubscriptionId $SubscriptionID

You can now interact with Azure from PowerShell and start your journey to automate Azure Sentinel.
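A quick sanity check after connecting is to confirm that the active context points at the subscription you expect. The sketch below assumes the Az.Accounts module is loaded; the helper name Test-AzSession is hypothetical.

```powershell
# Hypothetical helper: compares the active Az context with the expected subscription.
function Test-AzSession {
    param(
        [Parameter(Mandatory)]
        [string] $SubscriptionId
    )
    # Get-AzContext returns nothing when there is no active session.
    $context = Get-AzContext
    if (-not $context) {
        Write-Warning 'No active Azure session. Run Connect-AzAccount first.'
        return $false
    }
    # True only when the session is bound to the expected subscription.
    return ($context.Subscription.Id -eq $SubscriptionId)
}
```

You could call it as Test-AzSession -SubscriptionId $SubscriptionID at the top of a script and stop early when it returns False.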

A Step by Step To a Fully Automated Deployment

Every automation process starts with multiple small automated processes. In this post, you will learn how to provision the following components with PowerShell:

- Resource Group

- Log Analytics

- Azure Sentinel

- Saved Queries

- Hunting Queries

- Alert Rules

- Playbooks

- Workbooks

Azure Log Analytics, Azure Sentinel, and Logic Apps are all paid services.

Each component is a piece of the puzzle. Together they build a fully operational Azure Sentinel deployment, ready to monitor any environment.

Resource Group

The resource group is a container that holds related resources for an Azure solution.

In Azure, you logically group related resources to deploy, manage, and maintain them as a single entity.

With the New-AzResourceGroup cmdlet, you can create a new resource group.

Every resource in Azure requires a deployment location. The location refers to the datacenter region. In this guide, I will use the West Europe region.

$Parms = @{
    Name     = "Sentinel-RG"
    Location = "WestEurope"
}

New-AzResourceGroup @Parms
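In an automated pipeline the script usually runs more than once, so it is convenient to create the resource group only when it does not exist yet. The following is a sketch under that assumption; the helper name Confirm-ResourceGroup is hypothetical, while Get-AzResourceGroup and New-AzResourceGroup come from the Az module.

```powershell
# Hypothetical helper: returns the resource group, creating it only when it is missing.
function Confirm-ResourceGroup {
    param(
        [Parameter(Mandatory)] [string] $Name,
        [Parameter(Mandatory)] [string] $Location
    )
    # Get-AzResourceGroup writes an error when the group is missing, so suppress it.
    $existing = Get-AzResourceGroup -Name $Name -ErrorAction SilentlyContinue
    if ($existing) {
        return $existing
    }
    New-AzResourceGroup -Name $Name -Location $Location
}
```

A call such as Confirm-ResourceGroup -Name "Sentinel-RG" -Location "WestEurope" can then be repeated safely on every run.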

Log Analytics

Log Analytics is a service that helps you collect and analyze data generated by resources in your cloud and on-premises environments. It gives you real-time insights using integrated search and custom dashboards to readily analyze millions of records across all of your workloads and servers regardless of their physical location.

Azure Sentinel runs on a Log Analytics workspace and uses it to store all security-related data. With that said, Log Analytics is the first resource we need to provision.

To create a new Log Analytics workspace, you can use the New-AzOperationalInsightsWorkspace cmdlet.

$Parms = @{
    ResourceGroupName = "Sentinel-RG"
    Name              = "Saggiehaim-Sentinel-WS"
    Location          = "WestEurope"
}

New-AzOperationalInsightsWorkspace @Parms
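Because the workspace name is reused in every later step, it is worth checking it locally before calling Azure. The sketch below is an assumption-based check: the helper name Test-WorkspaceName is hypothetical, and the rule it encodes (4 to 63 characters, letters, digits, and hyphens, starting and ending with a letter or digit) is my reading of the Azure naming rules for Log Analytics workspaces, so verify it against the current documentation.

```powershell
# Hypothetical helper: checks a workspace name against the assumed naming rule.
function Test-WorkspaceName {
    param(
        [Parameter(Mandatory)]
        [string] $Name
    )
    # First and last character: letter or digit. Middle: 2 to 61 letters, digits, or hyphens.
    # Total length is therefore between 4 and 63 characters.
    $Name -match '^[A-Za-z0-9][A-Za-z0-9-]{2,61}[A-Za-z0-9]$'
}

Test-WorkspaceName -Name 'Saggiehaim-Sentinel-WS'   # name used in this post
Test-WorkspaceName -Name '-bad-name'                # starts with a hyphen
```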

Azure Sentinel

After provisioning Log Analytics, you can continue and onboard Azure Sentinel.

Use the Set-AzSentinel cmdlet to enable Azure Sentinel on the Log Analytics workspace:

$Parms = @{
    SubscriptionId = $SubscriptionID
    WorkspaceName  = "Saggiehaim-Sentinel-WS"
}

Set-AzSentinel @Parms

Azure Sentinel Saved Queries

Until this point, you have only provisioned “infrastructure.” With Azure Sentinel enabled, you can now start the “configuration” part and add content to your Azure Sentinel.

When it comes to SIEM and monitoring big data, an essential skill is the ability to extract the relevant information from the sea of data.

In Sentinel, you use the Kusto Query Language (KQL). With KQL, you can run queries inside Log Analytics and write Sentinel alert rules, hunting rules, workbooks, and more.

Some queries can be long and complex, and you don’t want to write them again and again. You can save time by keeping your queries inside Log Analytics and using them on demand.

You can organize your saved queries in folders by using the Category parameter.

You can push saved queries with the New-AzOperationalInsightsSavedSearch cmdlet:

# A single-quoted here-string keeps the query text exactly as written
$query = @'
// Number of requests
// Count the total number of calls across all APIs in the last 24 hours.
// Total number of calls per resource
ApiManagementGatewayLogs
| where TimeGenerated > ago(1d)
| summarize count(CorrelationId) by _ResourceId
'@

$param = @{
    ResourceGroupName = "Sentinel-RG"
    WorkspaceName     = "Saggiehaim-Sentinel-WS"
    SavedSearchId     = "NumberofAPICallsPerResource"
    # Name of the saved query
    DisplayName       = "Number of API calls per resource"
    # The name of the folder where you want to store your saved query
    Category          = "API Management"
    Query             = $query
    Version           = 1
    Force             = $true
}

New-AzOperationalInsightsSavedSearch @param

Another method is to use JSON or YAML files to hold the information. This method is the recommended approach. It allows you to manage your content inside a git repository, manage versions, and use it in your automated process.

Here is an example of a JSON file:

{
    "SavedSearchId": "NumberofAPICallsPerResource",
    "DisplayName": "Number of API calls per resource",
    "Category": "API Management",
    "Query": "// Number of requests\n// Count the total number of calls across all APIs in the last 24 hours.\n// Total number of calls per resource\nApiManagementGatewayLogs\n| where TimeGenerated > ago(1d)\n| summarize count(CorrelationId) by _ResourceId",
    "Version": 1
}

Now you need to adjust the script accordingly:

# -Raw reads the whole file as one string before parsing it as JSON
$SavedQuery = Get-Content -Path .\NumberofAPICallsPerResource.json -Raw | ConvertFrom-Json

$param = @{
    ResourceGroupName = "Sentinel-RG"
    WorkspaceName     = "Saggiehaim-Sentinel-WS"
    SavedSearchId     = $SavedQuery.SavedSearchId
    DisplayName       = $SavedQuery.DisplayName
    Category          = $SavedQuery.Category
    Query             = $SavedQuery.Query
    Version           = $SavedQuery.Version
    Force             = $true
}

New-AzOperationalInsightsSavedSearch @param
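When the definitions live in a git repository, a small pre-flight check can catch incomplete files before anything is pushed to the workspace. This is a sketch only: the helper name Test-SavedQueryDefinition is hypothetical, and the list of required properties is an assumption taken from the JSON example above.

```powershell
# Hypothetical helper: verifies that a saved-query definition has the fields used above.
function Test-SavedQueryDefinition {
    param(
        [Parameter(Mandatory)]
        $Definition
    )
    # Assumed required properties, taken from the JSON example in this post.
    $required = 'SavedSearchId', 'DisplayName', 'Category', 'Query'
    $missing = foreach ($property in $required) {
        if ([string]::IsNullOrWhiteSpace($Definition.$property)) { $property }
    }
    if ($missing) {
        Write-Warning "Missing properties: $($missing -join ', ')"
        return $false
    }
    return $true
}

# Inline sample; in practice the JSON would come from Get-Content -Raw.
$json = @'
{
  "SavedSearchId": "NumberofAPICallsPerResource",
  "DisplayName": "Number of API calls per resource",
  "Category": "API Management",
  "Query": "ApiManagementGatewayLogs\n| where TimeGenerated > ago(1d)",
  "Version": 1
}
'@
$definition = $json | ConvertFrom-Json
Test-SavedQueryDefinition -Definition $definition
```

Running the check in a loop over the SavedQuery folder, and importing only the files that pass, keeps a half-written file from breaking the whole run.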

Hunting Queries

Hunting queries help you find suspicious activity in your environment. While many are likely to return legitimate activity or potentially malicious activity, they can guide your hunting. If you are confident with the results after running these queries, you could consider turning some or all of them into Azure Sentinel Analytics to alert on.

To create hunting rules, you can use the Import-AzSentinelHuntingRule cmdlet.

First, you create a JSON file containing your hunting rule based on this schema:

{
    "analytics": [
        {
            "DisplayName": "Example of Hunting Rule",
            "Description": "This is the description of the query.",
            "Query": "// sample query\nSyslog\n| limit 10",
            "Tactics": [
                "Persistence",
                "Execution"
            ]
        }
    ]
}

Now, you can import the Hunting Query into your Azure Sentinel:

$Parms = @{
    WorkspaceName = "Saggiehaim-Sentinel-WS"
    # The path must be quoted inside a hashtable
    SettingsFile  = ".\exampleHuntingRule.json"
}

Import-AzSentinelHuntingRule @Parms

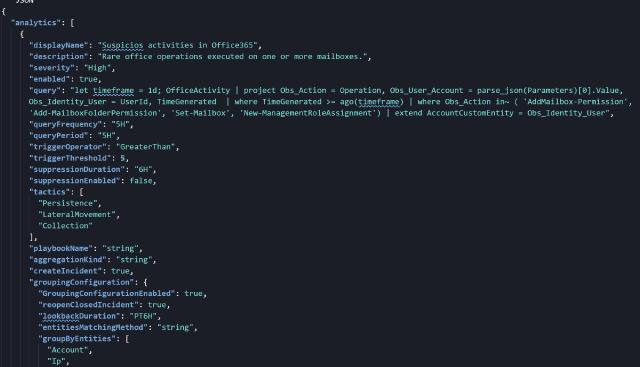
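Writing multi-line queries by hand into JSON is error-prone, because line breaks inside a JSON string have to be escaped. One way around this, sketched below, is to build the settings object in PowerShell and let ConvertTo-Json handle the escaping. The output path in the temp folder is an assumption for the demonstration; the property names follow the schema shown above.

```powershell
# Keep the query readable in a here-string; ConvertTo-Json escapes the line breaks.
$query = @'
// sample query
Syslog
| limit 10
'@

$settings = [ordered]@{
    analytics = @(
        [ordered]@{
            DisplayName = 'Example of Hunting Rule'
            Description = 'This is the description of the query.'
            Query       = $query
            Tactics     = @('Persistence', 'Execution')
        }
    )
}

# The default depth of 2 would flatten the nested Tactics array, so raise it.
$json = $settings | ConvertTo-Json -Depth 5

# Assumed location for the demo; in a repository this would be the HuntingRules folder.
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'exampleHuntingRule.json'
Set-Content -Path $path -Value $json -Encoding utf8

# Round trip: the parsed query should match the original text.
$roundTrip = Get-Content -Path $path -Raw | ConvertFrom-Json
$roundTrip.analytics[0].Query -eq $query
```

The resulting file can then be passed to Import-AzSentinelHuntingRule through the SettingsFile parameter as shown earlier.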

Alert Rules

Alert rules are queries that are defined to trigger incidents. You use them to raise incidents when security events occur in your environment. Just like hunting queries, you store your alert rules in a JSON file.

{
    "analytics": [
        {
            "displayName": "Suspicious activities in Office365",
            "description": "Rare office operations executed on one or more mailboxes.",
            "severity": "High",
            "enabled": true,
            "query": "let timeframe = 1d; OfficeActivity",
            "queryFrequency": "5H",
            "queryPeriod": "5H",
            "triggerOperator": "GreaterThan",
            "triggerThreshold": 5,
            "suppressionDuration": "6H",
            "suppressionEnabled": false,
            "tactics": [
                "Persistence",
                "LateralMovement",
                "Collection"
            ],
            "playbookName": "string",
            "aggregationKind": "string",
            "createIncident": true,
            "groupingConfiguration": {
                "GroupingConfigurationEnabled": true,
                "reopenClosedIncident": true,
                "lookbackDuration": "PT6H",
                "entitiesMatchingMethod": "string",
                "groupByEntities": [
                    "Account",
                    "Ip",
                    "Host",
                    "Url"
                ]
            }
        }
    ]
}

You can use the Import-AzSentinelAlertRule cmdlet to import your alert rules:

$Parms = @{
    WorkspaceName = "Saggiehaim-Sentinel-WS"
    # The path must be quoted inside a hashtable
    SettingsFile  = ".\exampleAlertRule.json"
}

Import-AzSentinelAlertRule @Parms
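A typo in a rule file is easier to fix before the import than after a failed run. The following sketch lints the rule JSON locally. The helper name Get-AlertRuleIssue is hypothetical, and the severity list (High, Medium, Low, Informational) reflects the severities Azure Sentinel offers; it is not taken from the AzSentinel module itself.

```powershell
# Hypothetical helper: returns a list of human-readable problems found in a rule file.
function Get-AlertRuleIssue {
    param(
        [Parameter(Mandatory)]
        [string] $Json
    )
    $allowedSeverity = 'High', 'Medium', 'Low', 'Informational'
    $settings = $Json | ConvertFrom-Json

    foreach ($rule in $settings.analytics) {
        if ([string]::IsNullOrWhiteSpace($rule.displayName)) {
            'A rule has no displayName.'
        }
        if ([string]::IsNullOrWhiteSpace($rule.query)) {
            "Rule '$($rule.displayName)' has no query."
        }
        if ($rule.severity -notin $allowedSeverity) {
            "Rule '$($rule.displayName)' has unexpected severity '$($rule.severity)'."
        }
    }
}

# Sample with a deliberately misspelled severity.
$sample = @'
{ "analytics": [ { "displayName": "Suspicious activities in Office365", "severity": "Hihg", "query": "OfficeActivity" } ] }
'@
$issues = @(Get-AlertRuleIssue -Json $sample)
$issues
```

An empty result means the file passed these basic checks; it does not guarantee that the import itself will succeed.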

Playbooks and Workbooks

Playbooks use Azure Logic Apps to respond to incidents automatically. Logic Apps are a native resource in Azure Resource Manager (ARM), and therefore we can automate their deployment with ARM templates.

Azure Sentinel allows you to create custom workbooks across your data. Workbooks visualize and monitor the data and provide versatility in creating custom dashboards.

Like Playbooks, Workbooks are native resources in Azure and are deployed with ARM templates.

You can find examples of Playbooks and Workbooks in the Azure Sentinel Community repository.

Because this is an ARM template deployment, you deploy it to the resource group and not to the Log Analytics workspace.

Use the New-AzResourceGroupDeployment cmdlet to deploy either a Workbook or a Playbook:

$Parms = @{
    ResourceGroupName = "Sentinel-RG"
    # The path must be quoted inside a hashtable
    TemplateFile      = ".\exampleTemplate.json"
}

New-AzResourceGroupDeployment @Parms

Take into account that the deployment will fail if a workbook with the same name already exists.
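To fail early on a malformed template, you can validate it before deploying. The sketch below wraps both steps; the helper name Invoke-TemplateDeployment and the deployment name format are hypothetical. Note that template validation does not necessarily detect an existing workbook with the same name, so that failure can still occur at deployment time.

```powershell
# Hypothetical helper: validates an ARM template and deploys it only when validation passes.
function Invoke-TemplateDeployment {
    param(
        [Parameter(Mandatory)] [string] $ResourceGroupName,
        [Parameter(Mandatory)] [string] $TemplateFile
    )
    $common = @{
        ResourceGroupName = $ResourceGroupName
        TemplateFile      = $TemplateFile
    }

    # Test-AzResourceGroupDeployment returns validation errors; no output means valid.
    $validationErrors = @(Test-AzResourceGroupDeployment @common)
    if ($validationErrors.Count -gt 0) {
        $validationErrors | ForEach-Object { Write-Warning $_.Message }
        return
    }

    # A timestamped name keeps each run visible in the deployment history.
    $name = 'sentinel-{0:yyyyMMddHHmmss}' -f (Get-Date)
    New-AzResourceGroupDeployment @common -Name $name
}
```

For example, Invoke-TemplateDeployment -ResourceGroupName "Sentinel-RG" -TemplateFile ".\exampleTemplate.json" deploys the template only after it passes validation.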

Plan first, Succeed later

You’ve learned how to provision each component and how to deploy your content. Now it’s time to prepare the content and learn how to connect securely to Azure, so you can automate the deployment from start to end.

Folder Structure

First, a word about folder structure. When I have many different files, I like to organize them in folders, so they are easy to manage and to use in the automation process. In this case, we have five different resource types, so I recommend the following structure:

Sentinel Automation
├───AlertsRules
├───HuntingRules
├───Playbooks
├───SavedQuery
└───Workbooks

The structure above allows us to match the right files to the right cmdlets. For example, to import all your alert rules, you can do the following:

$AlertRules = Get-ChildItem -Path ".\AlertsRules" -Filter "*.json" -File

foreach ($rule in $AlertRules) {
    try {
        $Parms = @{
            WorkspaceName  = "Saggiehaim-Sentinel-WS"
            # Pass the full path of the file currently being processed
            SettingsFile   = $rule.FullName
            SubscriptionId = $SubscriptionID
            Confirm        = $false
            # Make errors terminating so the catch block runs
            ErrorAction    = "Stop"
        }
        Import-AzSentinelAlertRule @Parms
    }
    catch {
        $ErrorMessage = $_.Exception.Message
        Write-Error "Unable to import Alert Rule '$($rule.Name)': $($ErrorMessage)"
    }
}
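The same folder-to-cmdlet idea can be written once as a lookup table instead of one loop per folder. The sketch below only builds the list of work to do and calls nothing in Azure. The helper name Get-ImportPlan is hypothetical, and the table covers only the two AzSentinel import cmdlets used in this post.

```powershell
# Folder name -> cmdlet that imports the JSON files stored in it.
$importers = [ordered]@{
    AlertsRules  = 'Import-AzSentinelAlertRule'
    HuntingRules = 'Import-AzSentinelHuntingRule'
}

# Hypothetical helper: lists every JSON file together with the cmdlet that should import it.
function Get-ImportPlan {
    param(
        [Parameter(Mandatory)] [string] $RootPath,
        [Parameter(Mandatory)] [System.Collections.IDictionary] $Importers
    )
    foreach ($folder in $Importers.Keys) {
        $path = Join-Path $RootPath $folder
        # Skip folders that do not exist in this repository.
        if (-not (Test-Path $path)) { continue }

        Get-ChildItem -Path $path -Filter '*.json' -File | ForEach-Object {
            [pscustomobject]@{
                Cmdlet       = $Importers[$folder]
                SettingsFile = $_.FullName
            }
        }
    }
}

# Build the plan for the current directory; the result is empty when the folders are absent.
$plan = @(Get-ImportPlan -RootPath '.' -Importers $importers)
$plan | Format-Table -AutoSize
```

Each entry can then be executed with the call operator, for example & $item.Cmdlet -WorkspaceName "Saggiehaim-Sentinel-WS" -SettingsFile $item.SettingsFile, inside the same try and catch pattern shown above.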

Connecting Securely To Azure

Another important topic is how to authenticate to Azure securely. If you paid attention when you first created a session with Azure using your own credentials, it asked you to sign in with a one-time password in the Microsoft portal. One-time passwords are not the behavior we want when we automate things, because they require human intervention. From a security point of view, however, this is exactly the expected behavior.

To overcome this, you need to use an App Registration account (a service principal). If you don’t know how to create one, you can follow this guide.

A little tip: keeping the password in plain text in scripts is not safe, so it’s better to secure it. The best approach is to use a certificate (in the guide, you will learn how to do it). If you still want to go without a certificate, you can at least protect the password: convert it to a secure string and save it to a file (I recommend restricting the ACL on the file). Keep in mind that on Windows the saved string is encrypted for the current user on the current machine, so the file cannot be reused elsewhere.

$CredsFile = "<Path>\PasswordFile.txt"

Read-Host -AsSecureString | ConvertFrom-SecureString | Out-File $CredsFile

Now you can connect to Azure more securely.

$TenantID = 'XXXX-XXXX-XXXX-XXXX-XXXX'
$SubscriptionID = 'XXXX-XXXX-XXXX-XXXX'
$AppId = 'XXXX-XXXX-XXXX-XXXX'

# Read the protected password back from the file created earlier
$securePassword = Get-Content $CredsFile | ConvertTo-SecureString
$credential = New-Object System.Management.Automation.PSCredential ($AppId, $securePassword)

$connectAzParams = @{
    ServicePrincipal = $true
    SubscriptionId   = $SubscriptionID
    Tenant           = $TenantID
    Credential       = $credential
    # Make errors terminating so the catch block runs
    ErrorAction      = 'Stop'
}

try {
    Connect-AzAccount @connectAzParams
}
catch {
    $ErrorMessage = $_.Exception.Message
    Write-Error "Unable to connect to Azure: $($ErrorMessage)"
    exit 1
}
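For the certificate approach mentioned above, the connection could look like the following sketch. It assumes the certificate is already installed in the certificate store of the account that runs the script and has been uploaded to the app registration; the helper name Connect-AzWithCertificate is hypothetical, while the parameters passed to Connect-AzAccount are the ones that cmdlet provides for service principal sign-in with a certificate.

```powershell
# Hypothetical helper: signs in as a service principal using a certificate thumbprint.
function Connect-AzWithCertificate {
    param(
        [Parameter(Mandatory)] [string] $TenantId,
        [Parameter(Mandatory)] [string] $SubscriptionId,
        [Parameter(Mandatory)] [string] $ApplicationId,
        [Parameter(Mandatory)] [string] $CertificateThumbprint
    )
    $params = @{
        ServicePrincipal      = $true
        Tenant                = $TenantId
        Subscription          = $SubscriptionId
        ApplicationId         = $ApplicationId
        CertificateThumbprint = $CertificateThumbprint
        # Stop on failure so the calling script can handle it with try and catch.
        ErrorAction           = 'Stop'
    }
    Connect-AzAccount @params
}
```

With this approach no password is stored on disk at all; the script only needs the thumbprint, which is not a secret on its own.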

Summary

Azure Sentinel is the “next-gen” SIEM in the cloud, and if you are into security, mastering Azure Sentinel is a must. Combining cloud capabilities with a SIEM that is managed by code gives you endless possibilities to protect your critical assets. In this post, you have learned about Azure Sentinel, its components, and how to automate a deployment securely. You learned how to organize your content, push it to your Azure Sentinel, and manage it with ease. Hopefully, this post will help you get started with Azure Sentinel and automate its deployment and maintenance.